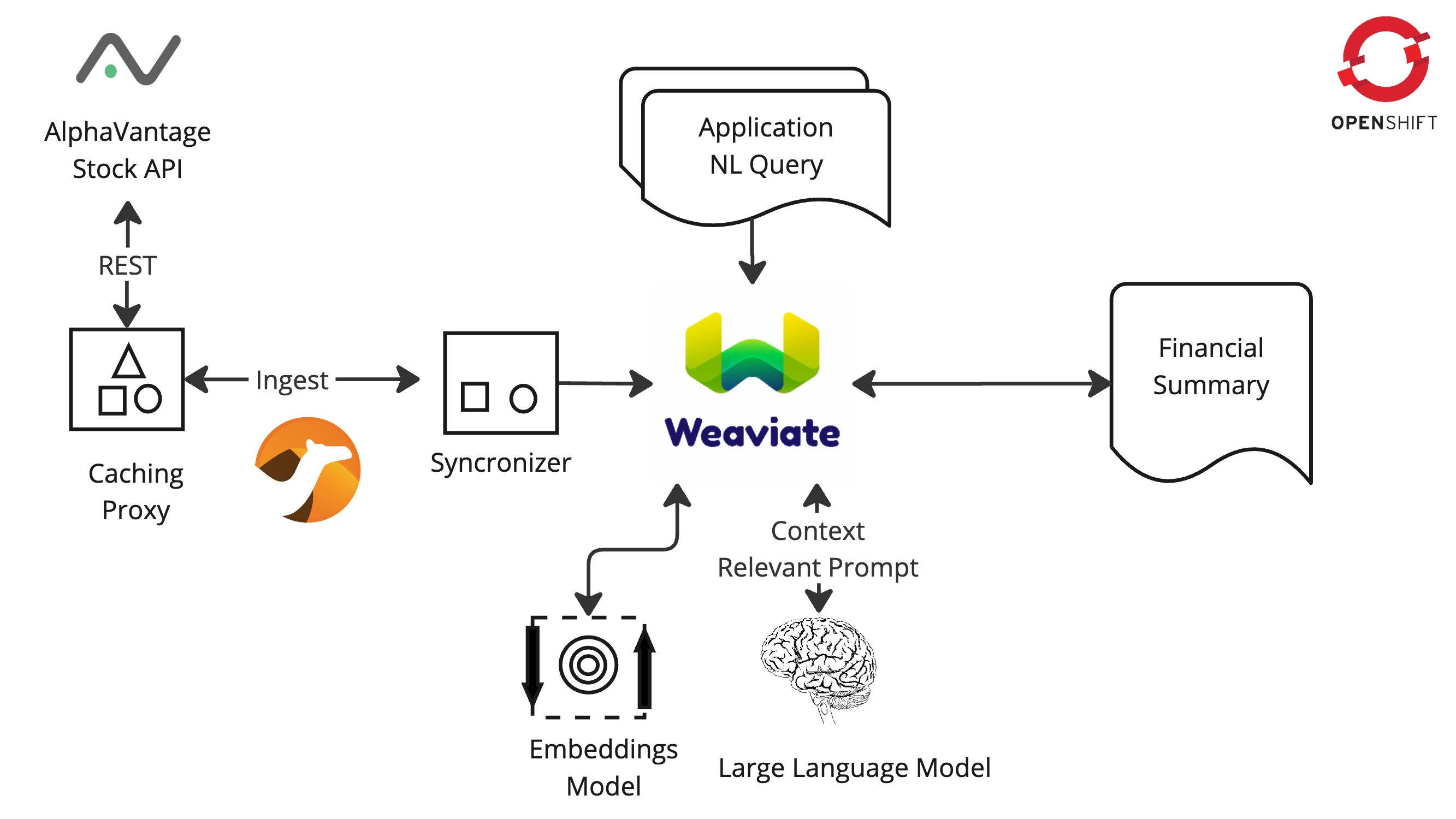

Summarization with Retrieval Augmented Generation (RAG) AI workflow

Architecture

1. Common Challenges

This solution pattern addresses the following challenges:

- Integrating private knowledge into a generative AI workflow.
- Capturing and synchronizing near real-time stock exchange data makes periodic fine-tuning of LLMs prohibitive.
- Exposing private data sources to externally hosted AI services raises security and privacy concerns for most enterprises.

2. Technology Stack

- Red Hat supported products:
  - Red Hat Application Foundation
  - Red Hat build of Apache Camel (included with Red Hat Application Foundation)
- Other open source products:

3. An in-depth look at the solution’s architecture

A closer look at the services and components that make up this solution pattern.

3.1. Data Flow

3.1.1. Ingest Engine

Data flow begins at the source, with stock information made available through a RESTful API service hosted by Alpha Vantage. The ingest engine, consisting of two Camel services, keeps the data in the Weaviate vector database fresh. The ingest processes are highly configurable and filter out invalid or null data. As stock symbol information is read in, vector embeddings are generated and stored in the Weaviate vector database along with the financial entities for each stock symbol. An example JSON record is available in the Alpha Vantage API documentation.
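The filtering step can be pictured with a short Python sketch. It is not the Camel implementation; it only illustrates dropping null-like fields and discarding records that lack required data before an embedding is generated. The required field names (loosely modeled on Alpha Vantage's company overview record), the set of null markers, and the `text_for_embedding` helper are all hypothetical.

```python
from typing import Optional

# Hypothetical field selection: the names follow Alpha Vantage's company
# overview record, but which fields the real ingest engine keeps is assumed.
REQUIRED_FIELDS = ("Symbol", "Name", "Description", "Sector")
# Strings treated as "no value" (assumed markers, not confirmed by the source).
NULL_STRINGS = {"", "None", "-"}


def is_null(value) -> bool:
    """Return True for values that carry no usable data."""
    return value is None or (isinstance(value, str) and value.strip() in NULL_STRINGS)


def clean_record(raw: dict) -> Optional[dict]:
    """Drop null-like fields; discard the record if a required field is missing."""
    cleaned = {key: value for key, value in raw.items() if not is_null(value)}
    if any(field not in cleaned for field in REQUIRED_FIELDS):
        return None
    return cleaned


def clean_batch(records: list) -> list:
    """Keep only the records that survive clean_record."""
    return [rec for rec in map(clean_record, records) if rec is not None]


def text_for_embedding(record: dict) -> str:
    """Build the (hypothetical) text that would be sent to the embeddings model."""
    return f"{record['Name']} ({record['Symbol']}): {record['Description']}"
```

In this sketch a record with a missing `Name` is discarded entirely, while an optional field such as a ratio reported as `"None"` is simply dropped from an otherwise valid record.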

3.1.2. Vector Database

The vector database used is Weaviate. Weaviate is a highly performant and scalable open-source vector database that simplifies the development of AI applications. Built-in vector and hybrid search, easy-to-connect machine learning models, and a focus on data privacy enable developers of all levels to build, iterate, and scale AI capabilities faster.
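To make "vector search" concrete, the following Python sketch ranks stored embeddings by cosine similarity to a query embedding. It is a brute-force illustration only: Weaviate uses an approximate nearest-neighbor index rather than scanning every vector, and the two-dimensional vectors and symbols here are invented for the example.

```python
import math


def cosine_similarity(a, b) -> float:
    """Cosine of the angle between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def nearest(query, vectors: dict, k: int = 2) -> list:
    """Return the k keys whose vectors are most similar to the query (brute force)."""
    scored = [(cosine_similarity(query, vec), key) for key, vec in vectors.items()]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [key for _, key in scored[:k]]
```

For example, with `{"AAA": [1.0, 0.0], "BBB": [0.0, 1.0], "CCC": [0.9, 0.1]}` and the query `[1.0, 0.0]`, the two nearest keys are `AAA` and `CCC`.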

3.1.3. Machine Learning (ML) models

This solution pattern makes use of two ML models: an embeddings model (all-minilm) and an LLM (granite3-dense:8b) that is configurable at run time. The embeddings model generates vector embeddings for each stock symbol, and the LLM summarizes the stock information. Granite is a series of LLMs developed by IBM and designed specifically for enterprise applications. It focuses on business use cases such as code generation, summarization, and classification, places a strong emphasis on security and data privacy, and is open source under the Apache 2.0 license.
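The "augmented" part of RAG comes down to placing retrieved records into the prompt that the LLM summarizes. The sketch below shows one way to assemble such a prompt in Python. The prompt wording, the `max_records` cut-off, and the function name are hypothetical and are not taken from the solution's code.

```python
def build_summary_prompt(question: str, records: list, max_records: int = 3) -> str:
    """Assemble a retrieval-augmented prompt from retrieved stock records.

    The instructions and layout are illustrative assumptions only.
    """
    context_lines = []
    for record in records[:max_records]:
        # Flatten each retrieved record into "key: value" pairs on one line.
        fields = ", ".join(f"{key}: {value}" for key, value in record.items())
        context_lines.append(f"- {fields}")
    context = "\n".join(context_lines)
    return (
        "Answer using only the stock information below. "
        "If the information is not present, say so.\n\n"
        f"Stock information:\n{context}\n\n"
        f"Question: {question}\nSummary:"
    )
```

Grounding the prompt in retrieved records is what lets the LLM summarize fresh stock data without being fine-tuned on it, which is the challenge the pattern sets out to address.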

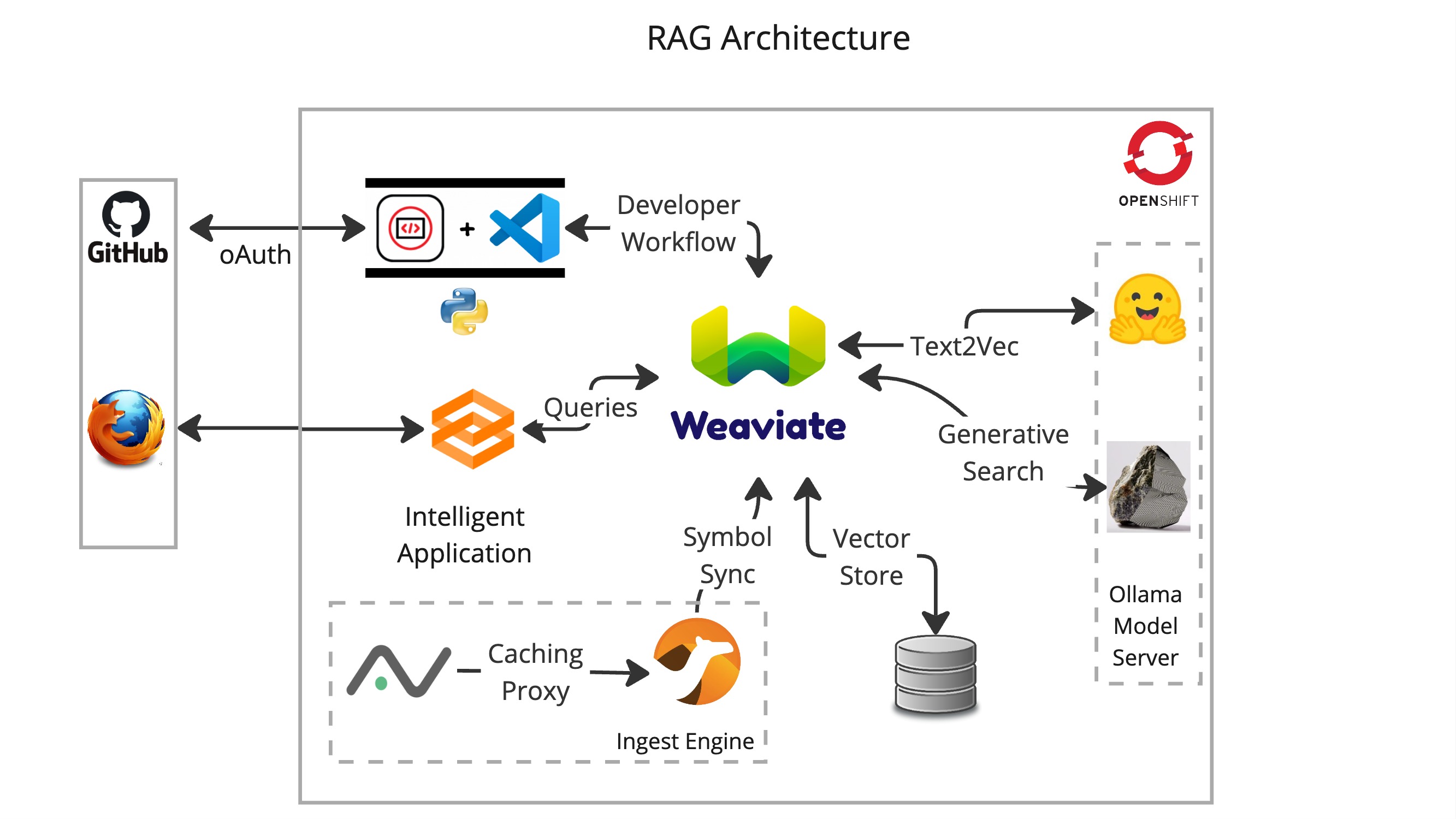

3.2. Architecture

3.2.1. Ingest Engine

The ingest engine consists of two services: a caching proxy and a synchronizer. The caching proxy is a Camel service that caches the stock information from the Alpha Vantage API, and the synchronizer is a Camel service that synchronizes the data with the Weaviate vector database. The ingest engine is deployed on OpenShift using a standard source-to-image workflow. Details can be found in the caching proxy and synchronizer repositories.
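The caching proxy's role can be illustrated with a time-to-live (TTL) cache. The Python sketch below is not the Camel service. It assumes a simple policy in which a cached response is reused until it is older than `ttl_seconds`, after which the upstream API is called again. The class name, the TTL policy, and the injectable clock are hypothetical.

```python
import time
from typing import Callable, Dict, Tuple


class TTLCache:
    """Serve cached upstream responses per symbol until they expire (assumed policy)."""

    def __init__(self, fetch: Callable[[str], dict], ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic):
        self._fetch = fetch          # function that calls the upstream API
        self._ttl = ttl_seconds      # how long a cached response stays fresh
        self._clock = clock          # injectable clock, handy for testing
        self._entries: Dict[str, Tuple[float, dict]] = {}

    def get(self, symbol: str) -> dict:
        now = self._clock()
        entry = self._entries.get(symbol)
        if entry is not None and now - entry[0] < self._ttl:
            return entry[1]          # fresh: serve from cache
        value = self._fetch(symbol)  # stale or missing: call upstream
        self._entries[symbol] = (now, value)
        return value
```

Placing a cache in front of the upstream API reduces the number of calls made against it, which matters when the provider enforces request limits, while the TTL bounds how stale the synchronized data can become.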

3.2.2. Weaviate vector database

The Weaviate vector database is installed using the Helm installer, which allows enterprise features to be enabled, including API key token authentication and data sharding. Weaviate’s cloud-native design supports horizontal scaling and efficient resource consumption, allowing it to handle large volumes of data and user requests. Interested readers are encouraged to find out more about the benefits of hosting Weaviate on OpenShift.
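With API key authentication enabled, clients pass the key as a bearer token. The Python sketch below builds, but does not send, an authenticated request for a hybrid (vector plus keyword) search against Weaviate's GraphQL endpoint. The class name `Stock`, its properties `symbol` and `name`, and the service URL are hypothetical; the real schema used by this solution may differ.

```python
import json
import urllib.request


def build_hybrid_query(weaviate_url: str, api_key: str, text: str,
                       alpha: float = 0.5, limit: int = 3) -> urllib.request.Request:
    """Build an authenticated GraphQL hybrid-search request (not sent here).

    The "Stock" class and its properties are hypothetical schema names.
    alpha weights vector search against keyword search.
    """
    # json.dumps produces a safely quoted string literal for the query text.
    query = (
        "{ Get { Stock(hybrid: {query: %s, alpha: %s}, limit: %d) "
        "{ symbol name } } }" % (json.dumps(text), alpha, limit)
    )
    body = json.dumps({"query": query}).encode("utf-8")
    return urllib.request.Request(
        url=weaviate_url.rstrip("/") + "/v1/graphql",
        data=body,
        headers={"Content-Type": "application/json",
                 "Authorization": f"Bearer {api_key}"},
        method="POST",
    )
```

The request can be sent with `urllib.request.urlopen(req)`; a missing or wrong key should be rejected by Weaviate when anonymous access is disabled.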

3.2.3. Ollama Model Server

Ollama is a popular, easy-to-use platform for hosting and serving LLMs. It supports a number of operating systems and integrates well with the open source ecosystem, including Weaviate. Ollama is deployed on OpenShift using a standard source-to-image workflow. Details can be found in this repository.
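Ollama serves models over a REST API. The Python sketch below builds, but does not send, requests for the two models named in this pattern: a non-streaming `/api/generate` call to granite3-dense:8b for summarization and an `/api/embed` call to all-minilm for embeddings. The service URL is a hypothetical placeholder, and older Ollama releases expose `/api/embeddings` with a `prompt` field instead of `/api/embed` with `input`.

```python
import json
import urllib.request


def _post(url: str, body: dict) -> urllib.request.Request:
    """Build a JSON POST request without sending it."""
    return urllib.request.Request(
        url=url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def build_generate_request(ollama_url: str, prompt: str,
                           model: str = "granite3-dense:8b") -> urllib.request.Request:
    """Summarization request; stream=False asks for a single JSON response."""
    body = {"model": model, "prompt": prompt, "stream": False}
    return _post(ollama_url.rstrip("/") + "/api/generate", body)


def build_embed_request(ollama_url: str, text: str,
                        model: str = "all-minilm") -> urllib.request.Request:
    """Embedding request for the embeddings model."""
    body = {"model": model, "input": text}
    return _post(ollama_url.rstrip("/") + "/api/embed", body)
```

Sending the generate request with `urllib.request.urlopen` and reading `json.load(resp)["response"]` would yield the generated summary text.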

3.2.4. User Interface

The UI is written in Python using the Gradio framework and is deployed using an OpenShift source-to-image workflow. Example queries are presented to the user as buttons that perform semantic and generative queries. Users may also enter custom queries and prompts.
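The difference between the two kinds of query can be sketched as the GraphQL a button might send to Weaviate. In this hypothetical Python sketch, a semantic query only retrieves the nearest records, while a generative query also asks Weaviate to pass each result to the LLM with a prompt, where `{name}` is interpolated from the record. It assumes Weaviate is configured with a text vectorizer module (for `nearText`) and a generative module; the `Stock` class, its properties, and the helper names are illustrative, not the solution's actual code.

```python
import json


def semantic_query(text: str, limit: int = 3) -> str:
    """Retrieve the records closest in meaning to the text."""
    return (
        "{ Get { Stock(nearText: {concepts: [%s]}, limit: %d) "
        "{ symbol name } } }" % (json.dumps(text), limit)
    )


def generative_query(text: str, prompt: str, limit: int = 3) -> str:
    """Retrieve records, then ask the LLM to respond to the prompt for each one."""
    return (
        "{ Get { Stock(nearText: {concepts: [%s]}, limit: %d) "
        "{ symbol name _additional { generate(singleResult: {prompt: %s}) "
        "{ singleResult error } } } } }" % (json.dumps(text), limit, json.dumps(prompt))
    )


def build_query(kind: str, text: str, prompt: str = "") -> str:
    """Dispatch a (hypothetical) button's query kind to the matching builder."""
    if kind == "semantic":
        return semantic_query(text)
    if kind == "generative":
        return generative_query(text, prompt)
    raise ValueError(f"unknown query kind: {kind}")
```

Keeping the query builders separate from the UI code would let the same logic back both the predefined buttons and free-form user queries.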

3.2.5. Developer IDE

Provided by Red Hat OpenShift Dev Spaces, this IDE allows developers to work with the codebase and test their changes in a live environment.