Solution Pattern: Event Driven API Management

Architecture

1. Common Challenges

The architecture team has identified a set of non-functional requirements for building a reliable webhook delivery system:

1.1. Security:

Webhook messages are sent to the consumers' public URLs, which anyone can reach, including unauthorized parties. Without additional measures, API consumers cannot verify that a webhook comes from a trusted API provider. Therefore, we need an authentication strategy that lets consumers trust incoming webhook notifications. One of the most secure approaches is to use a Webhook Signature, which works as follows:

- A secret key is known by both the webhook producer (the webhook delivery system) and the consumer.
- The webhook producer uses this key with a cryptographic algorithm such as HMAC SHA-256 to create a signature of the webhook payload, which is sent in a header along with the webhook request.
- When receiving the webhook request, the consumer computes the signature of the received payload in the same way and compares the result with the signature header of the request.
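The signing and verification steps above can be sketched as follows. This is a minimal illustration using Python's standard library, not the implementation used by the solution; the secret, the payload, and the function names are hypothetical.

```python
import hashlib
import hmac


def sign_payload(secret: bytes, payload: bytes) -> str:
    """Return the hex-encoded HMAC SHA-256 signature of the payload."""
    return hmac.new(secret, payload, hashlib.sha256).hexdigest()


def verify_signature(secret: bytes, payload: bytes, received_signature: str) -> bool:
    """Recompute the signature and compare it with the received one.

    compare_digest runs in constant time, which avoids leaking
    information about the expected signature through timing.
    """
    expected = sign_payload(secret, payload)
    return hmac.compare_digest(expected.encode(), received_signature.encode())


# Hypothetical values for illustration only.
secret = b"shared-mac-secret"
payload = b'{"orderId": "12345"}'

# Producer side: the signature travels in a request header.
signature = sign_payload(secret, payload)

# Consumer side: verify the raw request body against the header value.
print(verify_signature(secret, payload, signature))
```

The consumer should verify the signature against the raw request body as received, since re-serializing the payload may change its bytes and invalidate the signature.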

In addition to the authentication approach, we require that all consumer webhook endpoint URLs use HTTPS to ensure privacy and data integrity.

1.2. Scalability and performance:

- The webhook delivery system must be able to manage a growing number of webhooks and handle a high throughput of messages by scaling horizontally, adding or removing resources as needed.
- For cost efficiency and resource optimization, the capability to 'scale to zero' is required. This is a key feature of serverless computing architectures, where application components can automatically scale down to zero instances when not in use.
- The webhook delivery system must be optimized for fast message processing and delivery to meet real-time or near-real-time requirements.
- The webhook delivery system must use a caching component to cache the results of any required remote calls for better performance and throughput.

1.3. Support of Retry:

The webhook delivery system must retry failed deliveries (e.g., when the consumer's receiving server is down or returns any response other than 2xx) by applying an exponential backoff policy.

This policy increases the interval between retry attempts with each attempt, which avoids overwhelming the destination consumer during outages and provides a more efficient approach to managing retries over time.

The delay before each retry is calculated as: {interval} + {retry_count} ^ 4

For example, if you configure the maximum retry attempts to 5 and the interval to 60 seconds, the calculated delay for each retry is shown in the table below:

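| Retry Count | Next Retry in seconds | Total Seconds Elapsed |
|-------------|-----------------------|-----------------------|
| 1           | 61                    | 61                    |
| 2           | 76                    | 137                   |
| 3           | 141                   | 278                   |
| 4           | 316                   | 594                   |
| 5           | 685                   | 1,279                 |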
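The schedule can also be derived programmatically by applying the formula as stated. The sketch below is illustrative only; the function names are hypothetical and not part of the solution.

```python
from itertools import accumulate


def retry_delay(interval: int, retry_count: int) -> int:
    """Delay in seconds before the given retry: interval + retry_count ^ 4."""
    return interval + retry_count ** 4


def retry_schedule(interval: int, max_retries: int) -> list[tuple[int, int, int]]:
    """Return (retry_count, delay_seconds, total_elapsed_seconds) per retry."""
    delays = [retry_delay(interval, n) for n in range(1, max_retries + 1)]
    totals = accumulate(delays)  # running sum of the delays
    return [(n, d, t) for n, (d, t) in enumerate(zip(delays, totals), start=1)]


# Same configuration as the example: 5 retries, 60-second interval.
for retry, delay, total in retry_schedule(interval=60, max_retries=5):
    print(f"retry {retry}: wait {delay}s, {total}s elapsed in total")
```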

When all retry attempts fail, the system shall use a dead-letter queue for undeliverable messages.

The retry requirements necessitate a message broker with the capability of persistently scheduling messages.

1.4. Avoiding duplicate messages:

Webhook messages may be delivered more than once, which can cause unexpected behavior in consumer applications. The most common way to handle this is to attach a unique idempotency key to each event (e.g., for the order-created event, the order ID can be used). Consumers can then track the keys they have already processed and skip duplicates, following the idempotent consumer pattern.
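On the consumer side, the idempotent consumer pattern could look roughly like the sketch below. It keeps processed keys in memory for brevity, which is an assumption made for illustration; a real consumer would normally use a persistent, shared store. The class and handler names are hypothetical.

```python
class IdempotentConsumer:
    """Processes each idempotency key at most once."""

    def __init__(self, handler):
        self._handler = handler
        self._processed_keys = set()  # in-memory only, for illustration

    def handle(self, idempotency_key: str, event: dict) -> bool:
        """Return True if the event was processed, False if it was a duplicate."""
        if idempotency_key in self._processed_keys:
            return False
        self._handler(event)
        # Record the key only after the handler succeeds, so that a failed
        # attempt can still be retried later.
        self._processed_keys.add(idempotency_key)
        return True


processed_orders = []
consumer = IdempotentConsumer(processed_orders.append)

# The same order-created event delivered twice is processed once.
consumer.handle("order-1001", {"orderId": "order-1001"})
consumer.handle("order-1001", {"orderId": "order-1001"})
print(len(processed_orders))
```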

1.5. API Management requirements:

The business team has the following requirements related to API Management:

- Provide different subscription plans for the order-created event. The free plan does not require any payment and is limited to 100 webhook notifications per hour, while the paid plan offers an unlimited number of webhook notifications for a monthly subscription fee.
- Monitor and analyze consumer webhook usage patterns to identify clients that exceed their rate limits.
- Socialize event-driven APIs through a developer portal, showing the structure of the webhook message using the OpenAPI specification.
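To make the semantics of the free plan's limit concrete, the sketch below models a limit of 100 notifications per hour as a fixed-window counter. In the solution this limit is expected to be enforced by the API Management layer rather than by custom code, so the class, its parameters, and the fixed-window strategy are assumptions made purely for illustration.

```python
import time


class FixedWindowRateLimiter:
    """Allows at most `limit` calls per consumer in each time window."""

    def __init__(self, limit: int, window_seconds: float = 3600, clock=time.monotonic):
        self._limit = limit
        self._window = window_seconds
        self._clock = clock
        self._windows = {}  # consumer_id -> (window_start, count)

    def allow(self, consumer_id: str) -> bool:
        now = self._clock()
        start, count = self._windows.get(consumer_id, (now, 0))
        if now - start >= self._window:
            start, count = now, 0  # the previous window expired, start a new one
        if count >= self._limit:
            self._windows[consumer_id] = (start, count)
            return False
        self._windows[consumer_id] = (start, count + 1)
        return True


# Free plan: 100 webhook notifications per hour (hypothetical consumer ID).
free_plan = FixedWindowRateLimiter(limit=100)
print(free_plan.allow("consumer-a"))
```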

1.6. Other requirements:

- The webhook delivery system should be deployed as cloud-native microservices.
- The webhook delivery system should employ cloud-native configuration management to effectively manage settings, parameters, and policies.
- The webhook delivery system should be capable of integrating with various message brokers, caching components, and the 3scale API Management Admin REST API.
- The webhook delivery system should be configurable to work with any Kafka topic and support payloads in multiple formats, such as JSON, XML, and Avro. It should also be possible to provision and configure it with any event-driven 3scale API product.

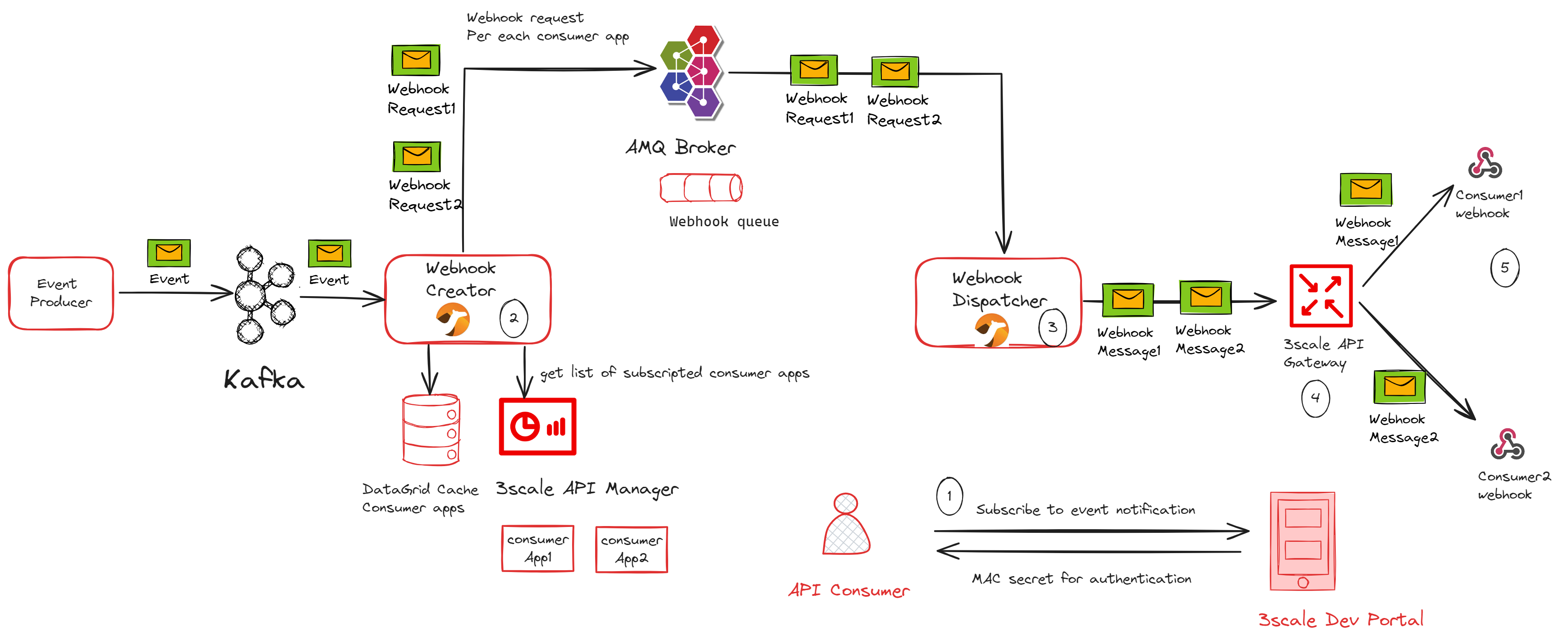

3. An in-depth look at the solution’s architecture

- API consumers subscribe to an application plan of the order-created event API product through the developer portal, providing their webhook endpoint. Upon successful subscription, the API consumer receives a MAC secret that can be used to authenticate incoming webhook callbacks.
- The Webhook Creator microservice listens to the “order-created-event” topic in the Kafka cluster. For each event, it uses the 3scale Admin REST API to look up the consumer applications subscribed to the API product and produces one webhook request per application. Each webhook request is placed on the “webhookQueue” queue in the AMQ broker and contains the payload of the Kafka message along with header parameters such as the consumer webhook endpoint, the retry count, and the MAC secret.
- The Webhook Dispatcher microservice listens to the “webhookQueue” queue in the AMQ broker cluster and creates an HTTP request to the 3scale API gateway containing the payload of the webhook. It is also responsible for implementing the retry mechanism for failed messages using an exponential backoff policy.
- When the request reaches the API gateway, a custom policy calculates the HMAC signature header and routes the webhook message to the consumer’s webhook endpoint.
- The consumer webhook receives the request, validates the HMAC header using the MAC secret shared through the developer portal, and processes the message accordingly.
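The fan-out performed by the Webhook Creator can be sketched as below: one incoming event yields one queued webhook request per subscribed application. The data classes, header names, URLs, and secrets are hypothetical; the actual services are Camel Quarkus routes, and the subscription list would come from the 3scale Admin REST API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Subscription:
    """A subscribed consumer application (illustrative fields only)."""
    webhook_url: str
    mac_secret: str


@dataclass
class WebhookRequest:
    """A message destined for the webhook queue."""
    payload: bytes
    headers: dict


def create_webhook_requests(payload: bytes, subscriptions: list) -> list:
    """Build one webhook request per subscription for a single event."""
    return [
        WebhookRequest(
            payload=payload,  # forwarded unchanged from the Kafka message
            headers={
                "webhookUrl": sub.webhook_url,  # hypothetical header names
                "retryCount": 0,
                "macSecret": sub.mac_secret,
            },
        )
        for sub in subscriptions
    ]


subscriptions = [
    Subscription("https://consumer-a.example.com/hooks", "secret-a"),
    Subscription("https://consumer-b.example.com/hooks", "secret-b"),
]
requests = create_webhook_requests(b'{"orderId": "1001"}', subscriptions)
print(len(requests))
```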

The API Gateway serves as the single point of egress for external traffic, securing, routing, imposing rate limits, monetizing, and monitoring webhook calls from the API provider to consumer webhooks.

3.1. Architectural design decisions

The following section describes the architectural design decisions that helped the Globex team meet the non-functional requirements.

3.1.1. Implementation Framework

Camel Quarkus is the implementation framework for the webhook delivery components. It combines the capabilities of Apache Camel and Quarkus to facilitate the integration and development of microservices and cloud-native applications, leveraging the strengths of both to provide a highly efficient runtime for integration tasks.

3.1.2. Security

The HMAC SHA-256 algorithm is selected as the authentication method to ensure that webhook consumers can trust the incoming notifications. This keyed message authentication code provides data integrity and authenticity. A 3scale custom policy calculates the HMAC header through the Camel Service policy extension, which is implemented using Camel Quarkus.

3.1.3. Scalability

KEDA (Kubernetes Event-Driven Autoscaling) is chosen for scaling the webhook creator and dispatcher services. KEDA facilitates more efficient resource usage in Kubernetes environments by allowing services to scale based on actual demand driven by events.

The webhook creator service scales based on the number of messages in the “order-created-event” Kafka topic. The maximum number of replicas that can be achieved is equal to the number of partitions in that topic. This limitation stems from Kafka’s architecture, where each partition in a Kafka topic can be consumed by only one consumer from a consumer group at any given time. The webhook dispatcher service scales based on the number of messages in the AMQ Broker queue “webhookQueue”.
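The effect of the partition limit on scaling can be illustrated with a simplified model. This is not KEDA's actual algorithm; the function, the `messages_per_replica` threshold, and the numbers are assumptions chosen to show how the partition count caps the replica count and how an empty backlog allows scaling to zero.

```python
import math


def desired_replicas(pending_messages: int, messages_per_replica: int,
                     partitions: int, min_replicas: int = 0) -> int:
    """Estimate the replica count for a Kafka consumer, capped by partitions."""
    if pending_messages <= 0:
        return min_replicas  # nothing to process: scale down (to zero by default)
    wanted = math.ceil(pending_messages / messages_per_replica)
    # Replicas beyond the partition count would sit idle, because each
    # partition is read by only one consumer in the group.
    return max(min_replicas, min(wanted, partitions))


# A topic with 3 partitions and 1,000 pending messages still yields 3 replicas.
print(desired_replicas(pending_messages=1000, messages_per_replica=10, partitions=3))
```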

The architecture team has chosen AMQ Broker over Kafka for the dispatcher service, as it is optimized for low-latency message delivery. Unlike a Kafka topic, an AMQ Broker queue does not tie the number of concurrent consumers to a partition count, so the number of dispatcher instances can grow with demand, which increases throughput and reduces latency.

The Webhook Creator and Dispatcher services are implemented using Camel Quarkus, which allows integration with KEDA using traits, including the ability to define scale-to-zero rules.

A Data Grid cache is used in the webhook creator service to cache the consumer applications retrieved from the 3scale API, for better scalability and performance.

3.1.4. Retry policy

The Dispatcher service uses an exponential backoff policy, and the DevOps team can manage the policy's configuration parameters in OpenShift using secrets. The AMQ Broker delayed-message feature is used to schedule retries of failed webhook deliveries.
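The decision the dispatcher makes after each delivery attempt can be sketched as follows. The function and the `retryCount` header are hypothetical. The sketch also assumes that the delayed delivery is requested through the `_AMQ_SCHED_DELIVERY` message property of ActiveMQ Artemis (the upstream project of AMQ Broker), which takes a delivery timestamp in milliseconds; the source does not state how the delay is configured, so treat this as an assumption.

```python
import time


def plan_next_step(status_code: int, retry_count: int, max_retries: int,
                   interval: int, now_ms=None):
    """Decide what to do after a delivery attempt.

    Returns (action, headers) where action is "done", "retry" or "dead-letter".
    `retry_count` is the number of retries already attempted.
    """
    if 200 <= status_code < 300:
        return "done", {}
    if retry_count >= max_retries:
        return "dead-letter", {}  # all retry attempts are exhausted
    next_retry = retry_count + 1
    delay_seconds = interval + next_retry ** 4  # formula from the retry requirement
    if now_ms is None:
        now_ms = int(time.time() * 1000)
    headers = {
        "retryCount": next_retry,  # hypothetical header name
        # Assumed Artemis property: deliver no earlier than this timestamp (ms).
        "_AMQ_SCHED_DELIVERY": now_ms + delay_seconds * 1000,
    }
    return "retry", headers


# A 503 response on the first attempt schedules retry number 1.
print(plan_next_step(503, retry_count=0, max_retries=5, interval=60))
```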

3.1.5. Avoiding duplicate messages

The webhook creator service uses the Idempotent Consumer EIP to filter out duplicate messages, backed by an Infinispan/Data Grid repository for better scalability. For the 'order-created' event, the idempotent key is the order ID, which producers communicate in the Kafka message header 'idempotentKey' rather than in the message body. This approach allows the webhook delivery system to remain payload-agnostic.
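The benefit of carrying the key in a header is that duplicates can be filtered without parsing the body. The sketch below illustrates this with messages modeled as (headers, payload) pairs, where headers are a list of (name, bytes) tuples; that representation, the function names, and the choice to let messages without a key pass through are assumptions, and the in-memory set stands in for the Infinispan/Data Grid repository.

```python
def extract_idempotent_key(headers, header_name="idempotentKey"):
    """Return the idempotent key from the message headers, or None if absent."""
    for name, value in headers:
        if name == header_name and value is not None:
            return value.decode("utf-8")
    return None


def filter_duplicates(messages, seen_keys):
    """Yield payloads whose idempotent key has not been seen before.

    The payload is treated as opaque bytes, so JSON, XML and Avro bodies
    are handled the same way.
    """
    for headers, payload in messages:
        key = extract_idempotent_key(headers)
        if key is not None:
            if key in seen_keys:
                continue  # duplicate: skip without inspecting the payload
            seen_keys.add(key)
        yield payload  # assumption: messages without a key are passed through


messages = [
    ([("idempotentKey", b"1001")], b'{"orderId": "1001"}'),
    ([("idempotentKey", b"1001")], b'{"orderId": "1001"}'),  # redelivery
    ([("idempotentKey", b"1002")], b"<order id='1002'/>"),
]
seen = set()
unique_payloads = list(filter_duplicates(messages, seen))
print(len(unique_payloads))
```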