Solution Pattern: Optimizing Traffic and Observability with OpenShift Service Mesh 3

Architecture

1. Common Challenges Addressed

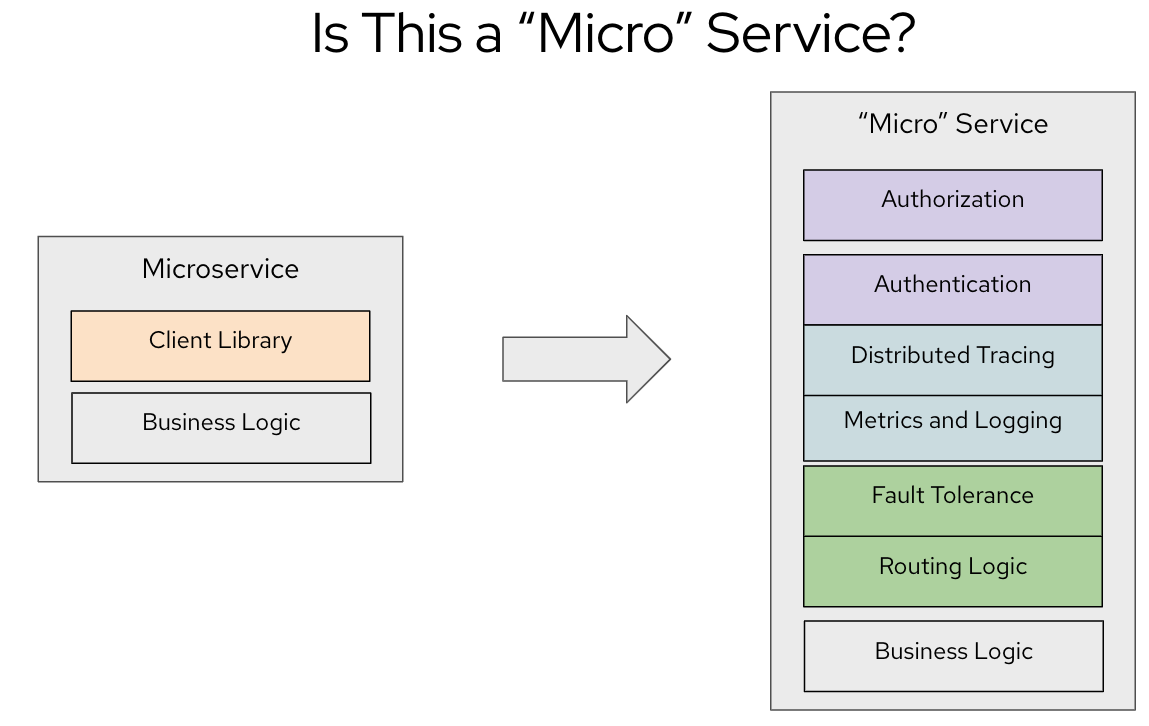

In modern application architectures based on containers and Kubernetes, developers typically aim to focus on delivering the core business logic of the services they build. However, for these services to comply with organizational requirements such as security, monitoring, high-availability networking, and other operational needs, significant additional overhead is required. These operational concerns often involve tasks unrelated to the core function of the service, such as enforcing security policies, managing traffic flows, enabling service-to-service communication, and ensuring observability.

This leads to a situation where a microservice must not only handle its business logic but also embed capabilities like traffic management, resilience, and telemetry—all of which add complexity and dilute the agility that microservices architectures are meant to provide.

Now imagine having to custom-build and manage this operational overhead for every single microservice in an application, especially when these services are written in various programming languages and frameworks. The lack of standardization across the stack results in:

- Inconsistent Security Policies: Different teams may implement varying levels of security depending on their tools and knowledge, leading to vulnerabilities.
- Limited Observability: Without a unified approach to logging, metrics, and tracing, troubleshooting and performance optimization become tedious and time-consuming.
- Inefficient Traffic Management: Developers may struggle to implement traffic control mechanisms like retries, load balancing, or circuit breaking without a standardized solution.
- Decreased Developer Productivity: Engineers spend more time on operational concerns than on delivering business value, reducing overall velocity.
- Loss of Agility in Microservices: The promised agility of microservices gets undermined by the fragmented, ad-hoc implementation of cross-cutting concerns.

1.1. How OpenShift Service Mesh Solves These Challenges

OpenShift Service Mesh addresses these challenges by providing a platform-native, unified solution that abstracts away the operational complexities of microservices architectures. It allows developers to focus solely on the business logic of their services while enabling platform teams to:

- Enforce Consistent Security Policies: Mutual TLS (mTLS) encryption and fine-grained access controls are implemented out of the box, ensuring all services adhere to a uniform security baseline.
- Enable Seamless Observability: Built-in platform tools like Distributed Tracing, Kiali, and Prometheus provide centralized tracing, visualization, and monitoring, giving teams actionable insights across the service mesh.
- Streamline Traffic Management: Intelligent traffic routing, load balancing, and support for advanced deployment strategies (e.g., canary releases) simplify managing and optimizing service-to-service communication.
- Enhance Reliability and Resilience: Features like automatic retries, circuit breakers, and failover mechanisms ensure high availability even under challenging network conditions.
- Support Kubernetes-Native Standards: OpenShift Service Mesh 3’s support for the Kubernetes Gateway API allows for modern, scalable management of ingress and egress traffic across clusters.

All of this is achieved with few or no changes to service code.

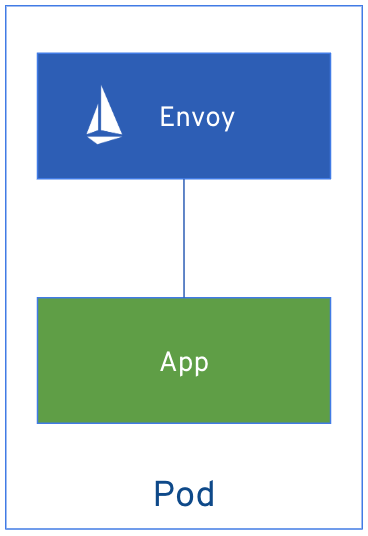

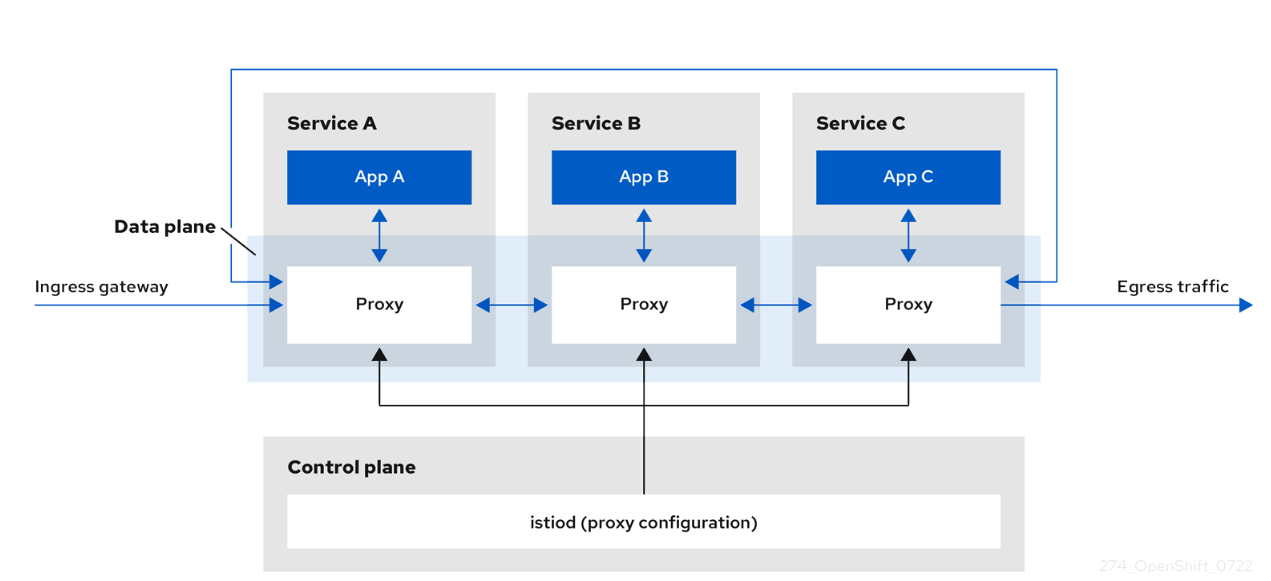

OpenShift Service Mesh uses a proxy container (Envoy) that is injected into a pod to intercept and manage all network traffic for your applications. This proxy allows you to enable powerful features, like traffic control, security, and monitoring, based on the settings you define in the Service Mesh control plane in a way that is decoupled from the application code.

The control plane takes your desired configuration and its view of the services, and dynamically configures the proxies, updating them as the rules or the environment change.

The data plane is the set of Envoy proxies that carry the communication between services within the mesh.

2. Technology Stack used in this Solution Pattern


- Red Hat supported products
  - Red Hat OpenShift Container Platform includes services to support Service Mesh
- Other open source products:

3. An in-depth look at the solution’s architecture

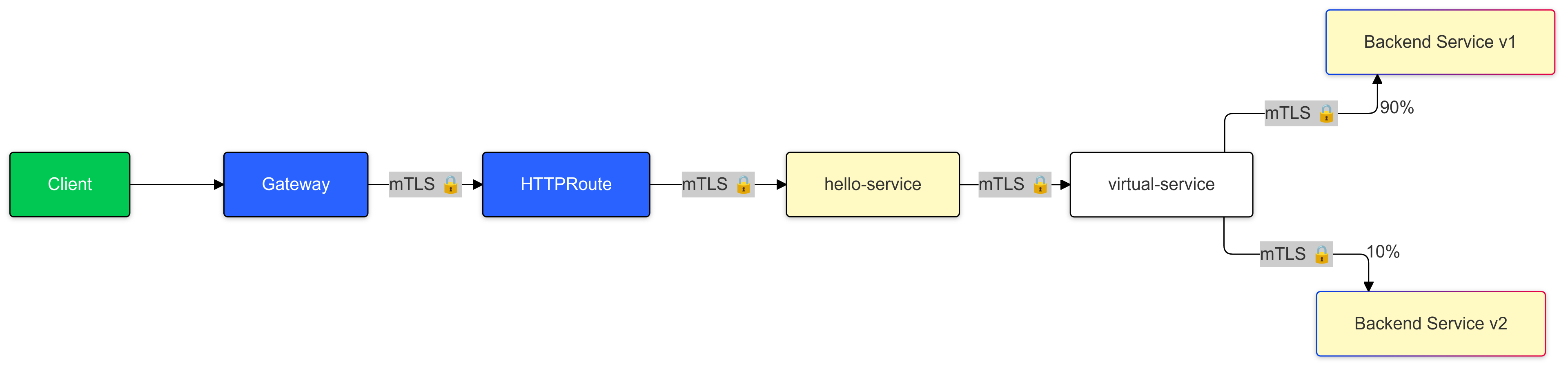

OpenShift Service Mesh 3, leveraging the Kubernetes Gateway API, provides a Kubernetes-native and efficient approach to managing cluster ingress and canary deployments. Here’s how it addresses the challenges:

3.1. Traffic Splitting with Virtual Services:

- The application or operations team defines a Virtual Service within OpenShift Service Mesh to split traffic dynamically between `v1` and `v2` of the back-end-service. Initially, 90% of traffic is routed to `v1`, while 10% is routed to `v2`.
- This setup ensures that most users experience the stable `v1` while `v2` is tested under real-world conditions with a small subset of traffic.
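The 90/10 split described above could be expressed roughly as follows. This is a minimal sketch rather than the manifest used by the solution pattern: the resource names, the `version` pod labels, and the choice of the `networking.istio.io/v1` API version are assumptions made for illustration.

```yaml
# Hypothetical names and labels: adjust to your own deployment.
# The DestinationRule defines the two subsets the VirtualService refers to.
apiVersion: networking.istio.io/v1
kind: DestinationRule
metadata:
  name: back-end-service
spec:
  host: back-end-service
  subsets:
  - name: v1
    labels:
      version: v1   # assumes v1 pods carry the label version=v1
  - name: v2
    labels:
      version: v2   # assumes v2 pods carry the label version=v2
---
# The VirtualService splits requests between the subsets by weight.
apiVersion: networking.istio.io/v1
kind: VirtualService
metadata:
  name: back-end-service
spec:
  hosts:
  - back-end-service
  http:
  - route:
    - destination:
        host: back-end-service
        subset: v1
      weight: 90    # stable version receives most traffic
    - destination:
        host: back-end-service
        subset: v2
      weight: 10    # canary version receives a small share
```

Changing the two `weight` values (which must sum to 100) is what shifts traffic between versions; setting them to 100 and 0 sends all traffic back to `v1`.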

3.2. Real-Time Observability:

- Integrated tools like Distributed Tracing and Kiali enable the team to visualize request flows and monitor key performance indicators (KPIs) such as latency, error rates, and success rates for `v2` of the back-end-service.
- The Red Hat build of OpenTelemetry provides detailed traces of user requests, helping identify performance bottlenecks or errors in `v2`.
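Trace collection for mesh workloads is typically switched on with an Istio `Telemetry` resource. The sketch below assumes that a tracing extension provider named `otel` has already been registered in the mesh configuration and points at an OpenTelemetry collector; the provider name, the resource name, and the sampling rate are illustrative and do not come from this solution pattern.

```yaml
apiVersion: telemetry.istio.io/v1
kind: Telemetry
metadata:
  name: mesh-tracing               # hypothetical name
  namespace: istio-system          # the mesh root namespace, so the setting applies mesh-wide
spec:
  tracing:
  - providers:
    - name: otel                   # assumed extension provider defined in the mesh config
    randomSamplingPercentage: 100  # sample every request; usually lowered in production
```

Sampling every request makes a low-traffic canary such as `v2` easier to inspect, at the cost of more trace data to store.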

3.3. Progressive Rollout:

- Once the team confirms that `v2` is stable, they gradually increase the traffic percentage directed to it, for example moving to 50/50 and eventually to 100% for `v2`.
- If issues are detected, traffic can be shifted back to `v1` of the back-end-service immediately by updating the Virtual Service configuration, minimizing user impact.

3.4. Secure Communication with mTLS:

- All service-to-service communication between `v1`, `v2`, and other dependent services is encrypted by default with mutual TLS (mTLS). This ensures that sensitive customer data remains protected throughout the deployment process.
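Istio sidecars negotiate mTLS with each other automatically. To additionally reject plaintext traffic, a mesh-wide `PeerAuthentication` policy in `STRICT` mode is commonly applied. The following is a sketch that assumes `istio-system` is the mesh root namespace; it is not taken from the solution pattern itself.

```yaml
apiVersion: security.istio.io/v1
kind: PeerAuthentication
metadata:
  name: default
  namespace: istio-system   # assumed root namespace, which makes the policy mesh-wide
spec:
  mtls:
    mode: STRICT            # workloads accept only mutual TLS connections
```

Under this policy, clients without a sidecar can no longer call mesh workloads directly, so it is usually enabled only after every participating service has been onboarded to the mesh.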

3.5. Platform Integration:

- Since OpenShift Service Mesh is included with the OpenShift Container Platform subscription, the team can leverage enterprise support and seamless integration with OpenShift Observability, minimizing operational overhead.

4. About the Technology Stack

OpenShift Service Mesh 3 is built on a modular, Kubernetes-native architecture designed to address the complexities of managing microservices communication, security, traffic management, and observability. It integrates tightly with Red Hat OpenShift, leveraging Kubernetes-native APIs and tools.

4.1. Core Components

- Istio
  - Purpose: Core of the service mesh, responsible for service-to-service communication, traffic management, and policy enforcement.
  - Key Features:
    - Intelligent traffic routing (e.g., canary deployments, mirroring).
    - Mutual TLS (mTLS) for secure communications.
    - Resiliency features like retries, circuit breaking, and failover.
  - Deployment: Control plane components (`istiod`, `istio-cni`) run in dedicated namespaces (`istio-system`, `istio-cni`).

- Gateway (Gateway API)
  - Purpose: Handle external traffic entering the mesh and secure egress traffic leaving the mesh.
  - Describes how traffic can be translated to Services within the cluster.
  - Key Features:
    - Centralized traffic entry/exit point.
    - Policy enforcement and telemetry collection.
  - Can express capabilities like HTTP header manipulation, traffic weighting and mirroring, and TCP/UDP routing.
  - May be attached to one or more Route references, each of which directs a subset of traffic to a specific service.
  - Uses Istio for ingress traffic management.

- HTTPRoute (Gateway API)
  - Enables advanced routing capabilities for service ingress.
  - Specifies routing behavior of HTTP requests from a Gateway listener to a Kubernetes `Service` resource associated with a pod.
  - Each Route includes a way to reference the parent resources it wants to attach to.

- Distributed Tracing (via Tempo)
  - Purpose: Distributed tracing for monitoring and debugging service interactions.
  - Key Features:
    - Visualize and trace request flows across services.
    - Identify performance bottlenecks and latency issues.
  - Deployment: Deployed as part of the OpenShift Service Mesh stack in a dedicated namespace (`tracing-system`).

- Kiali
  - Purpose: Visualization and management tool for the service mesh.
  - Key Features:
    - Graphical representation of service communication.
    - Real-time traffic monitoring and health metrics.
    - Istio configuration.
    - OSSM Plugin for OpenShift Web Console.
  - Deployment: Runs in the same namespace as the control plane or a dedicated namespace (`istio-system`).

- OpenShift Observability
  - Purpose: Metrics collection and visualization.
  - Key Features:
    - Collect and store time-series data for service mesh metrics.
    - Provide dashboards in the OpenShift Web Console for performance and health monitoring.
    - Utilizes `ServiceMonitor` and `PodMonitor` CRDs to gather Service Mesh control plane and namespace-level metrics.

- Ingress/Egress Gateways
  - Purpose: Handle external traffic entering the mesh and secure egress traffic leaving the mesh.
  - Key Features:
    - Centralized traffic entry/exit point.
    - Policy enforcement and telemetry collection.
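To make the relationship between the Gateway and HTTPRoute components above more concrete, here is a minimal sketch of an ingress gateway with a weighted route. The resource names, the port numbers, and the two per-version Services (`back-end-service-v1`, `back-end-service-v2`) are hypothetical; `gatewayClassName: istio` assumes the Istio-provided gateway class is available in the cluster.

```yaml
# Gateway: the entry point, implemented by Istio.
apiVersion: gateway.networking.k8s.io/v1
kind: Gateway
metadata:
  name: demo-gateway          # hypothetical name
spec:
  gatewayClassName: istio     # assumes the Istio gateway class is installed
  listeners:
  - name: http
    port: 80
    protocol: HTTP
    allowedRoutes:
      namespaces:
        from: Same            # only routes in the Gateway's namespace may attach
---
# HTTPRoute: attaches to the Gateway through parentRefs and
# forwards requests to Kubernetes Services by weight.
apiVersion: gateway.networking.k8s.io/v1
kind: HTTPRoute
metadata:
  name: back-end-route        # hypothetical name
spec:
  parentRefs:
  - name: demo-gateway
  rules:
  - matches:
    - path:
        type: PathPrefix
        value: /
    backendRefs:
    - name: back-end-service-v1   # hypothetical Service selecting v1 pods
      port: 8080                  # assumed service port
      weight: 90
    - name: back-end-service-v2   # hypothetical Service selecting v2 pods
      port: 8080
      weight: 10
```

The `parentRefs` entry is the mechanism by which a Route references the parent resource it wants to attach to, and the `weight` fields on `backendRefs` are how the Gateway API expresses traffic weighting.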

4.2. Key Architectural Decisions

- Kubernetes Gateway API Support
  - OpenShift Service Mesh 3 incorporates the Kubernetes Gateway API to modernize ingress and egress traffic management. This provides better scalability and integration compared to legacy Service Mesh (OSSM 2.x) ingress/egress configurations.
- Centralized Observability
  - By integrating Distributed Tracing, Kiali, and OpenShift Observability, OSSM provides a unified observability stack, reducing the need for separate tooling and ensuring consistent monitoring.
- mTLS by Default
  - All service-to-service communications within the mesh are secured using mutual TLS, meeting enterprise-grade security requirements out of the box.