Solution Patterns: Optimizing Traffic and Observability with OpenShift Service Mesh 3

Red Hat OpenShift Service Mesh adds a transparent layer to existing distributed applications without requiring any changes to the application code. The mesh connects deployed services into a network that provides discovery, load balancing, service-to-service authentication, failure recovery, metrics, and monitoring. A service mesh also provides more complex operational functionality, including A/B testing, canary releases, access control, and end-to-end authentication.

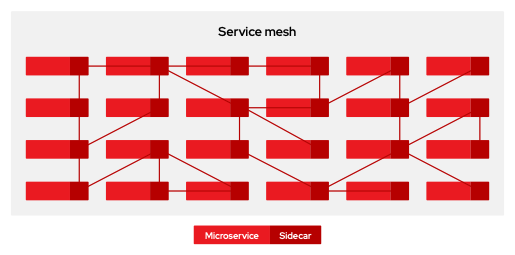

Microservice architectures split the work of enterprise applications into modular services (deployed as pods in Kubernetes), which can make scaling and maintenance easier. However, as an enterprise application built on a microservice architecture grows in size and complexity, it can become difficult to understand and manage. A service mesh addresses these problems by intercepting traffic between services. It can modify, redirect, or create requests to other services independently of the business logic.

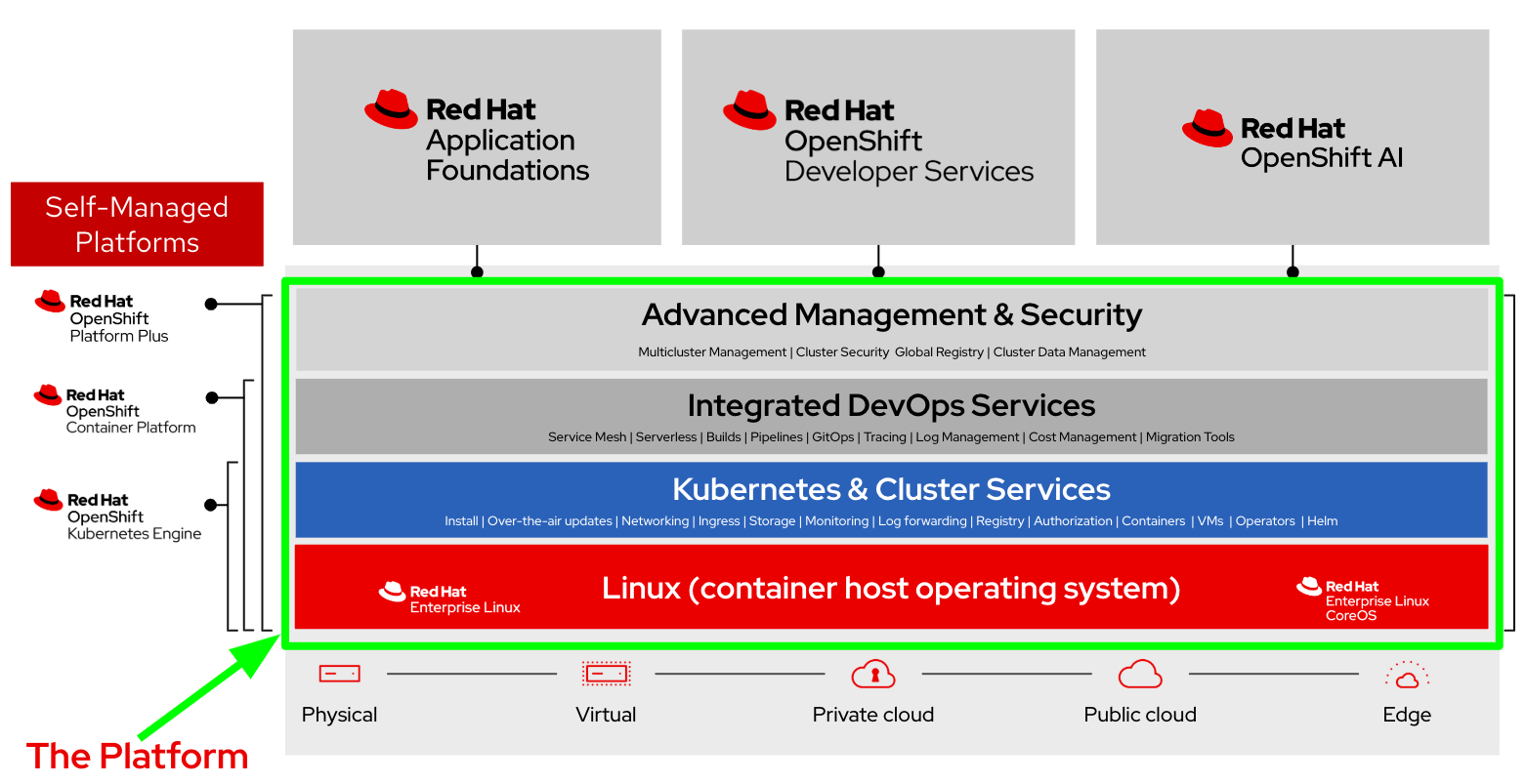

Included with OpenShift Container Platform Subscription

A key benefit of OpenShift Service Mesh is that it, along with most of its critical dependencies (e.g., Istio, Jaeger, Kiali, Prometheus), is included and fully supported with the OpenShift Container Platform subscription. This integration ensures:

- Enterprise Support: Comprehensive support from Red Hat for both OpenShift Service Mesh and its dependencies.
- Seamless Upgrades: Coordinated updates with the OpenShift platform, ensuring compatibility and reducing operational overhead.
- Cost Efficiency: Organizations can leverage advanced microservices management capabilities without incurring additional licensing or support costs.

By standardizing these capabilities at the platform level and bundling them into the OpenShift subscription, OpenShift Service Mesh lets teams build, scale, and manage microservices architectures with less operational complexity and full vendor support. Developers can then focus on innovation and delivering business value.

This solution pattern demonstrates how OpenShift Service Mesh 3 lets platform teams manage traffic flows precisely, using a canary deployment as a worked example of a safe, incremental rollout. It also shows how observability insights help teams detect and resolve issues quickly, improving reliability and resiliency across hybrid cloud environments.

|

Support for the Kubernetes Gateway API became generally available in OpenShift Service Mesh 2.6, which supports Gateway API version 1.0.0. This functionality is exclusive to Red Hat OpenShift Service Mesh, and the Gateway API custom resource definitions (CRDs) are not supported outside this context. OpenShift Container Platform 4.18 and earlier releases do not install the Gateway API CRDs by default, so this Solution Pattern installs them. |
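Because the pattern depends on these CRDs being present, it can help to check for them before applying any routes. The Python sketch below is an illustration, not part of the Solution Pattern's own tooling: it takes CRD names as printed by `oc get crd -o name` and reports which of the Gateway API CRDs needed for HTTPRoute-based traffic splitting are missing. `REQUIRED_CRDS` and `missing_gateway_crds` are hypothetical names, and the set deliberately covers only the three standard-channel resources that route-based traffic splitting relies on, not every Gateway API CRD.

```python
# Gateway API CRDs that HTTPRoute-based traffic splitting relies on.
# Hypothetical subset chosen for this sketch; the full Gateway API ships more CRDs.
REQUIRED_CRDS = {
    "gatewayclasses.gateway.networking.k8s.io",
    "gateways.gateway.networking.k8s.io",
    "httproutes.gateway.networking.k8s.io",
}

# Prefix that `oc get crd -o name` puts in front of each CRD name.
CRD_PREFIX = "customresourcedefinition.apiextensions.k8s.io/"


def missing_gateway_crds(installed: list[str]) -> set[str]:
    """Return the required Gateway API CRDs absent from `installed`.

    `installed` may contain bare CRD names or the prefixed form
    printed by `oc get crd -o name`.
    """
    names = {line.strip().removeprefix(CRD_PREFIX) for line in installed}
    return REQUIRED_CRDS - names
```

For example, passing `output.splitlines()`, where `output` is the captured text of `oc get crd -o name`, returns an empty set when all three CRDs are installed.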

Contributors: Leon Levy - Red Hat OpenShift Specialist Solution Architect

|

Solution Patterns help you understand the art of the possible with Red Hat’s portfolio and are not intended to be used as-is in production environments. You are welcome to use any part of this solution pattern for your own workloads. |

1. Use cases

Common use cases that this architecture can address are:

- Traffic Management: Control the flow of traffic and API calls between services, make calls more reliable, and make the network more robust in the face of adverse conditions.
- Service Identity and Security: Give services in the mesh a verifiable identity and protect service traffic as it flows over networks of varying degrees of trustworthiness.
- Policy Enforcement: Apply organizational policy to the interactions between services, ensuring that access policies are enforced and that resources are fairly distributed among consumers. Policy changes are made by configuring the mesh, not by changing application code.
- Telemetry: Understand the dependencies between services and the nature and flow of traffic between them, so that issues can be identified quickly.

2. The story behind this solution pattern

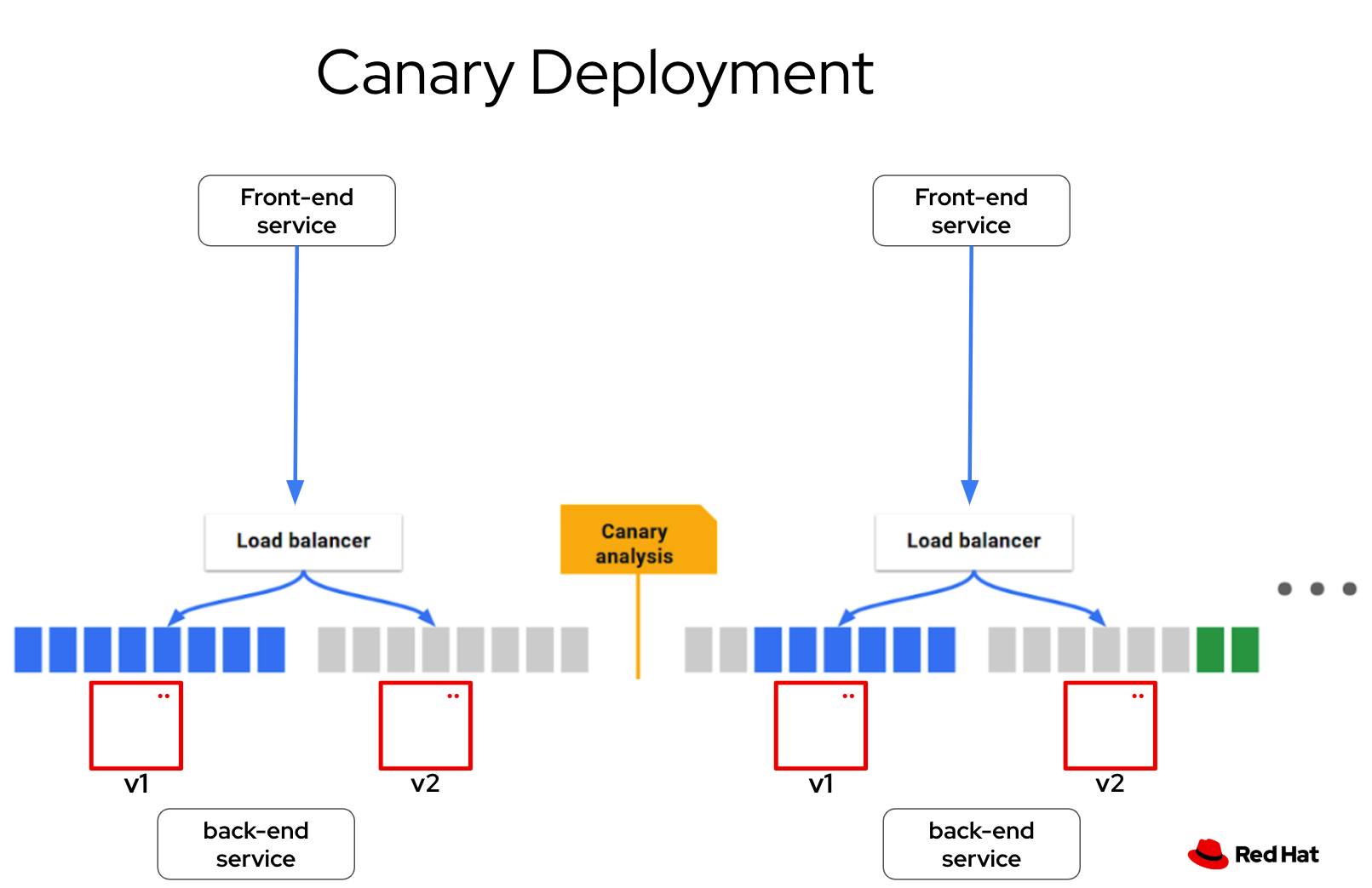

Canary Deployment with Traffic Splitting

Objective: Safely roll out a new version of a microservice while monitoring its performance.

A retail company has an application running on OpenShift that handles online orders. The development team wants to deploy a new version (v2) of their hello-service REST API without disrupting users of the current version (v1).

3. The Solution

Using OpenShift Service Mesh 3 with the Kubernetes Gateway API, they configure a traffic-splitting policy to direct 90% of traffic to v1 and 10% to v2.
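In the Gateway API, a traffic split is expressed as weighted `backendRefs` on an `HTTPRoute`: each backend receives a share of requests proportional to its weight. The Python sketch below illustrates that shape under stated assumptions and is not the pattern's actual manifest. It assumes v1 and v2 are exposed as separate Services named `hello-service-v1` and `hello-service-v2` on port 8080 in a `hello` namespace, and that the route attaches to a Gateway named `hello-gateway`; all of these names, and the helper `build_canary_httproute`, are hypothetical. It prints JSON, which `oc apply -f -` accepts just like YAML.

```python
import json


def build_canary_httproute(v1_weight: int, v2_weight: int) -> dict:
    """Return an HTTPRoute manifest splitting traffic between two
    hypothetical Services, hello-service-v1 and hello-service-v2."""
    if v1_weight < 0 or v2_weight < 0 or v1_weight + v2_weight == 0:
        raise ValueError("weights must be non-negative and not both zero")
    return {
        "apiVersion": "gateway.networking.k8s.io/v1",
        "kind": "HTTPRoute",
        "metadata": {"name": "hello-service-canary", "namespace": "hello"},
        "spec": {
            # Attach the route to a Gateway (placeholder name).
            "parentRefs": [{"name": "hello-gateway"}],
            "rules": [
                {
                    # Weights are relative: each backend gets weight / sum of weights.
                    "backendRefs": [
                        {"name": "hello-service-v1", "port": 8080, "weight": v1_weight},
                        {"name": "hello-service-v2", "port": 8080, "weight": v2_weight},
                    ]
                }
            ],
        },
    }


if __name__ == "__main__":
    # The initial canary policy: 90% to v1, 10% to v2.
    print(json.dumps(build_canary_httproute(90, 10), indent=2))
```

Because the weights are relative, 90 and 10 yield a 90%/10% split; shifting more traffic to v2 is a matter of re-applying the route with new weights.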

With integrated observability tools like Jaeger and Kiali, the team monitors traffic flow, latency, and error rates for v2 in real time.
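Kiali's traffic graphs and health indicators are built on Istio's standard Prometheus metrics, so the same signals can also be queried directly. The sketch below builds two PromQL queries for the canary: the fraction of 5xx responses served by v2, and its 99th-percentile request latency. It assumes the v2 workload is named `hello-service-v2` (hypothetical) and that the Istio standard metrics `istio_requests_total` and `istio_request_duration_milliseconds_bucket` are being scraped by the cluster's Prometheus. `v2_health_queries` is an illustrative helper, not a Kiali or Prometheus API.

```python
def v2_health_queries(workload: str = "hello-service-v2", window: str = "5m") -> dict[str, str]:
    """Build PromQL queries for a canary workload's error rate and p99 latency.

    Uses Istio standard metrics as reported by the server-side proxy
    (reporter="destination"). The default workload name is a placeholder.
    """
    selector = f'reporter="destination",destination_workload="{workload}"'
    # Share of requests to the workload that returned a 5xx status code.
    error_rate = (
        f'sum(rate(istio_requests_total{{{selector},response_code=~"5.."}}[{window}]))'
        f" / sum(rate(istio_requests_total{{{selector}}}[{window}]))"
    )
    # 99th-percentile request duration, aggregated across histogram buckets.
    p99_latency_ms = (
        "histogram_quantile(0.99, sum(rate("
        f"istio_request_duration_milliseconds_bucket{{{selector}}}[{window}]"
        ")) by (le))"
    )
    return {"error_rate": error_rate, "p99_latency_ms": p99_latency_ms}


if __name__ == "__main__":
    for name, query in v2_health_queries().items():
        print(f"{name}: {query}")
```

The resulting strings can be pasted into a Prometheus query UI. The 5-minute window is an arbitrary choice that trades responsiveness for noise.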

Once performance metrics confirm stability, they incrementally increase traffic to v2 until it handles 100% of requests, ensuring a smooth transition.
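The incremental promotion can be viewed as a loop: after each step, compare v2's metrics against thresholds, then either shift more traffic to it or roll back. The Python sketch below shows one possible decision function. The step schedule (10, 25, 50, 100), the 1% error-rate limit, the 500 ms p99 latency limit, and the names `CanaryMetrics` and `next_v2_weight` are all hypothetical choices for illustration; in practice the metric values would come from the mesh's telemetry, for example Prometheus.

```python
from dataclasses import dataclass

# Hypothetical promotion schedule: percentage of traffic sent to v2 at each step.
ROLLOUT_STEPS = [10, 25, 50, 100]


@dataclass
class CanaryMetrics:
    error_rate: float      # fraction of v2 responses that were 5xx, e.g. 0.002
    p99_latency_ms: float  # v2's 99th-percentile latency in milliseconds


def next_v2_weight(current: int, metrics: CanaryMetrics,
                   max_error_rate: float = 0.01,
                   max_p99_ms: float = 500.0) -> int:
    """Return v2's next traffic weight: advance one step if healthy, else roll back to 0."""
    if metrics.error_rate > max_error_rate or metrics.p99_latency_ms > max_p99_ms:
        return 0  # roll back: send all traffic to v1
    for step in ROLLOUT_STEPS:
        if step > current:
            return step
    return 100  # already fully promoted


if __name__ == "__main__":
    weight = 10  # start from the 90/10 canary split
    # Made-up sample readings, for illustration only.
    observed = [CanaryMetrics(0.001, 120.0), CanaryMetrics(0.002, 180.0), CanaryMetrics(0.003, 210.0)]
    for m in observed:
        weight = next_v2_weight(weight, m)
        print(f"v1={100 - weight}% v2={weight}%")
```

The returned value is v2's weight, and v1's weight is `100 - weight`; both would be written into the traffic-splitting route. Dropping to 0 on any breach keeps the rollback rule simple, while a stricter rollout might require several consecutive healthy windows before advancing.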

This pattern covers the following OpenShift Service Mesh use cases:

- Traffic Control for Continuous Delivery: Enable safe progressive delivery with traffic management across application versions.
- Application Observability: Out-of-the-box application metrics, request logs, and distributed traces for system observability.

All of this runs while service-to-service communication is encrypted with mutual TLS (mTLS) by default.
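Istio's automatic mTLS encrypts traffic between workloads that are part of the mesh, but the default PERMISSIVE mode still accepts plaintext connections, for example from clients outside the mesh. To require encryption, a `PeerAuthentication` resource can set the mode to `STRICT`. The Python sketch below builds such a resource for a hypothetical `hello` namespace and prints it as JSON for `oc apply -f -`. `strict_mtls_policy` is an illustrative helper, and whether strict mode is appropriate depends on whether any non-mesh clients still call these services.

```python
import json


def strict_mtls_policy(namespace: str) -> dict:
    """Return a PeerAuthentication that makes workloads in `namespace`
    accept only mutual-TLS traffic (plaintext requests are rejected)."""
    return {
        "apiVersion": "security.istio.io/v1",
        "kind": "PeerAuthentication",
        # With no selector, the policy applies to every workload in the namespace;
        # naming it "default" is a common convention.
        "metadata": {"name": "default", "namespace": namespace},
        "spec": {"mtls": {"mode": "STRICT"}},
    }


if __name__ == "__main__":
    # "hello" is a hypothetical application namespace.
    print(json.dumps(strict_mtls_policy("hello"), indent=2))
```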